Introduction: The Economics of Efficiency

When OpenAI serves ChatGPT to millions of users, every percentage point of GPU efficiency translates to millions in infrastructure costs. The difference between 10 percent and 40 percent Model FLOPs Utilization (MFU) can determine whether your LLM service is profitable or bleeding money. In the world of large-scale AI deployment, understanding your hardware at the deepest level isn’t just an academic exercise—it’s a business imperative.

This guide reveals the architecture and monitoring techniques that separate amateur deployments from production-grade systems. We’ll explore how modern LLM inference maps to NVIDIA’s revolutionary H100 architecture, dissect the metrics that truly matter, and provide the knowledge needed to achieve the 2-10x performance improvements that industry leaders routinely accomplish.

Since you’re already familiar with the basics of LLM inference from previous discussions, we’ll dive directly into the advanced architectural details and sophisticated monitoring strategies that will transform your understanding of GPU optimization.

LLM Inference Process: A Hardware Perspective

Before we explore the H100’s revolutionary architecture, let’s establish how LLM inference operations map to GPU hardware. This understanding forms the foundation for interpreting the metrics we’ll later use for optimization.

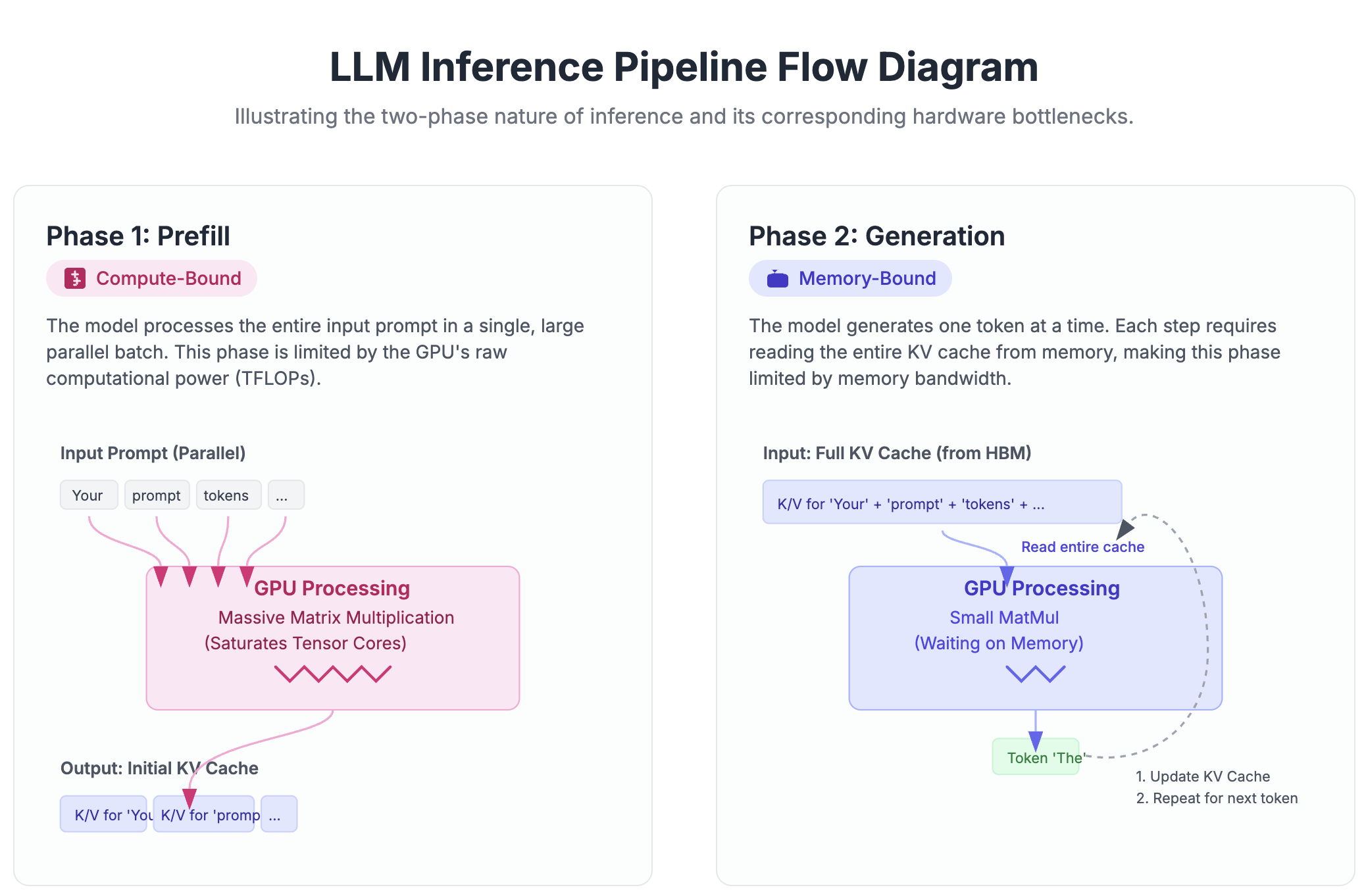

Modern LLM inference consists of two distinct phases that stress different aspects of GPU architecture. The prefill phase, where the model processes the entire input context, is fundamentally compute-bound. During this phase, the model performs massive matrix multiplications across all input tokens simultaneously, creating work that can effectively saturate the GPU’s computational units. In contrast, the generation phase, where tokens are produced one at a time, becomes memory-bound due to the autoregressive nature of the process. Each new token requires accessing the entire key-value cache while performing relatively minimal computation.

The memory transfer operations begin with Host-to-Device (H2D) transfers moving input tokens via PCIe. On a x16 link, this means 64 GB/s for PCIe Gen 4 or, on H100 systems, 128 GB/s for Gen 5 (both bidirectional totals). Once data reaches the GPU, it enters a complex memory hierarchy that we’ll explore in detail in the next section. The efficiency of these transfers often determines the lower bound of inference latency, particularly for smaller models where compute isn’t the bottleneck.

Within the GPU, memory movement follows strict hierarchical patterns. Data flows from global memory (HBM) through various cache levels before reaching the compute units. Understanding this hierarchy is crucial because memory bandwidth, not compute capacity, often becomes the limiting factor in LLM inference performance.

H100 Architecture Deep Dive: Essential Components for LLM Inference

Understanding the H100’s architecture is fundamental to optimizing LLM inference. Each component serves a specific purpose in the complex orchestration of transformer computations. This section provides a comprehensive primer on what each architectural element does and how it contributes to overall system performance.

Streaming Multiprocessors (SMs): The Computational Foundation

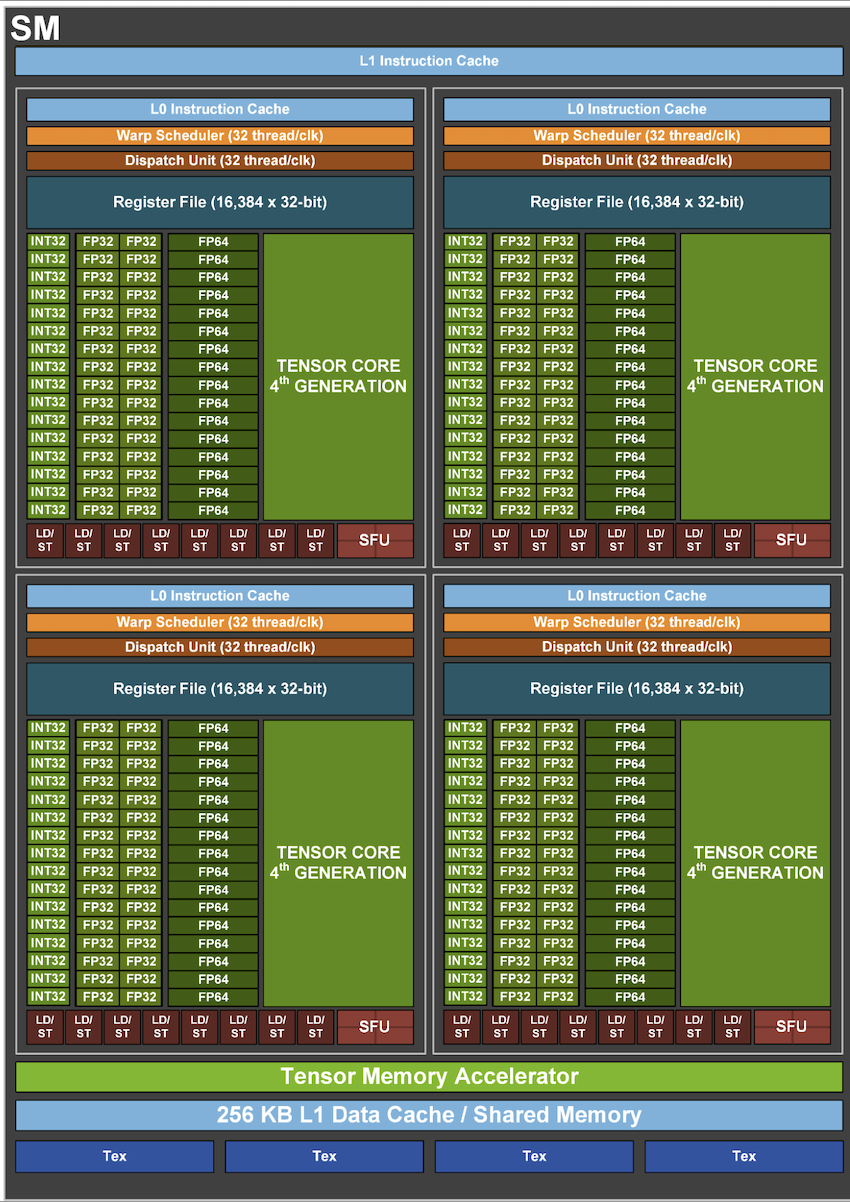

The Streaming Multiprocessor is the fundamental processing unit of the GPU. The H100 SXM5 ships with 132 SMs enabled (the full GH100 die has 144), each capable of independent instruction execution. Each SM functions as a complete processor with its own instruction cache, schedulers, execution units, and register file.

Within each SM, four warp schedulers manage thread execution. A warp consists of 32 threads that execute in lockstep—when one thread in a warp executes an instruction, all 32 execute the same instruction on different data. Each scheduler can dispatch instructions from a different warp every cycle, enabling the SM to hide memory latency by switching between warps when one stalls.

The SM contains 128 CUDA cores for general-purpose computation, handling integer and single-precision floating-point operations. These cores execute the non-matrix operations in neural networks: activation functions, normalization, element-wise operations, and control flow. The SM also houses 4 Tensor Cores, specialized units that perform matrix multiply-accumulate operations at dramatically higher throughput than CUDA cores.

Each SM includes 256 KB of register file storage, providing ultra-fast temporary storage for thread-local variables. This generous register allocation enables complex kernels to maintain their working set entirely in registers, avoiding slower memory accesses. The register file is banked to allow multiple simultaneous accesses, critical for maintaining throughput when all threads need data simultaneously.

Memory Hierarchy: From Registers to HBM3

The memory system follows a strict hierarchy, with each level trading capacity for speed. Understanding this hierarchy is crucial for inference optimization, as data movement often dominates execution time.

Registers provide the fastest storage at approximately 20 TB/s of aggregate bandwidth per SM. Each thread can access up to 255 registers, with access latency of just one clock cycle. Register allocation happens at compile time, and efficient register use is critical for kernel performance.

Shared Memory and L1 Cache share a 256 KB pool per SM, of which up to 228 KB can be configured as shared memory. Shared memory enables threads within a block to communicate and share data with latency of approximately 30 cycles. This memory is organized into 32 banks to enable parallel access, which is critical for algorithms like Flash Attention that rely on efficient shared memory access patterns.

L2 Cache provides 50 MB of shared storage across all SMs with approximately 6 TB/s of bandwidth. The L2 cache maintains frequently accessed data like model weights and popular activation tensors. Its partitioned design allows multiple SMs to access different cache lines simultaneously without contention.

HBM3 (High Bandwidth Memory) delivers 80 GB of capacity with 3 TB/s of bandwidth through 10 memory controllers. HBM3 uses a 5120-bit wide interface achieved through vertical stacking of memory dies directly on the GPU package. Access latency ranges from 200-300 cycles, making it crucial to hide this latency through parallelism and caching.

Tensor Cores: Matrix Multiplication Acceleration

Tensor Cores are specialized processing units designed exclusively for matrix multiply-accumulate operations, the dominant computation in transformer models. The first-generation Tensor Cores in Volta performed a 4×4×4 matrix multiply-accumulate per clock cycle. Each generation since has increased per-core throughput, and all of them deliver dramatically higher throughput than traditional CUDA cores.

The fourth-generation Tensor Cores in H100 support multiple precision formats. FP64 provides full double precision for scientific computing. TF32 (TensorFloat-32) offers the range of FP32 with the precision of FP16, providing a drop-in replacement for FP32 training. FP16 and BF16 (BrainFloat16) enable mixed-precision training and inference. FP8 in two variants (E4M3 and E5M2) doubles throughput while maintaining acceptable accuracy for most transformer operations. INT8 provides further acceleration for quantized inference.

Each Tensor Core operates on small matrix tiles, typically 16×16 or smaller, depending on the precision. The operation D = A × B + C is performed in a single instruction, where A, B, C, and D are matrix tiles. This fused operation eliminates the need to write intermediate results to memory, significantly improving efficiency.

Transformer Engine: Intelligence for Transformer Models

The Transformer Engine is not a physical component but a collection of hardware and software optimizations specifically designed for transformer architectures. It automatically manages numerical precision throughout the network, choosing optimal formats for different operations.

The engine maintains statistics about tensor magnitudes and automatically scales values to maximize precision within the available dynamic range. For attention computations, it might use FP16 for the softmax operation while using FP8 for matrix multiplications. This dynamic precision management happens transparently, requiring no manual intervention while delivering near-FP16 accuracy at FP8 speeds.

The Transformer Engine also includes optimized implementations of common transformer operations. Layer normalization, positional encodings, and attention patterns are accelerated through specialized hardware paths. These optimizations are exposed through libraries like cuBLAS and cuDNN, making them accessible to framework developers.

NVLink and PCIe Interfaces: System Connectivity

The H100 supports both NVLink 4.0 and PCIe Gen5 for system connectivity. NVLink provides 900 GB/s of bidirectional bandwidth (18 links at 50 GB/s each) for GPU-to-GPU communication, essential for model parallelism and multi-GPU inference. The high bandwidth and low latency of NVLink enable treating multiple GPUs almost as a single larger GPU for compatible workloads.

PCIe Gen5 delivers 128 GB/s of bidirectional bandwidth for host communication and storage access. This interface handles model loading, input data transfer, and result retrieval. The increased bandwidth of Gen5 reduces the time spent waiting for data transfer, particularly important for smaller models where transfer time might dominate computation time.

Hardware Schedulers: Orchestrating Execution

Beyond the warp schedulers in each SM, the H100 includes global hardware schedulers that manage work distribution across the GPU. The Gigathread Engine schedules thread blocks to SMs, considering factors like load balancing, cache locality, and resource availability.

The Work Distributor ensures efficient distribution of work across all available SMs, preventing scenarios where some SMs sit idle while others are overloaded. It understands the resource requirements of each kernel and schedules blocks to maximize occupancy while avoiding resource conflicts.

These hardware schedulers operate with sub-microsecond latency, enabling fine-grained scheduling decisions that would be impossible to implement in software. They continuously monitor SM utilization and adjust scheduling decisions dynamically, ensuring optimal resource utilization even with irregular workloads.

Why This Architecture Matters: Each component in the H100 is designed to address specific bottlenecks in transformer inference. The massive register files enable complex kernels, the enhanced memory hierarchy reduces data movement overhead, specialized units like Tensor Cores and the Tensor Memory Accelerator (TMA) accelerate common operations, and intelligent scheduling ensures all resources are effectively utilized. Understanding how these components work together enables developers to write software that fully exploits the hardware’s capabilities.

Model FLOPs Utilization: The North Star Metric

Now that we understand the hardware foundation, we can properly appreciate why Model FLOPs Utilization (MFU) has become the definitive metric for LLM inference efficiency. Unlike simpler metrics that only indicate whether the GPU is busy, MFU measures how effectively we’re using the computational capacity we’ve paid for.

Understanding MFU in Context

Model FLOPs Utilization represents the ratio of achieved computational throughput to theoretical peak hardware throughput. When we report 30 percent MFU, we’re saying that out of the H100’s theoretical 989 TFLOPS of FP16 compute, we’re achieving approximately 297 TFLOPS of useful model computation. The remaining capacity is lost to memory bottlenecks, kernel launch overhead, synchronization, and other inefficiencies.

The fundamental MFU calculation starts with understanding the computational requirements of transformer models. For a forward pass, we need approximately 2 FLOPs per parameter per token (one multiply and one add per weight) for the feed-forward and projection layers. The attention computation adds FLOPs that scale quadratically with the sequence length:

Attention FLOPs per layer ≈ 2 × L_seq² × D_hidden

Definitions:

- N_layers: number of transformer layers

- L_seq: input sequence length (tokens)

- D_hidden: hidden size (n_heads × d_head)

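Note: the constant varies with what is counted. Counting both the QK^T and attention × V products over the full L_seq × L_seq matrix gives 4, while causal masking roughly halves that. The key point is the quadratic scaling with L_seq.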

This quadratic scaling explains why long context lengths can dramatically impact computational requirements.
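The MFU calculation above can be sketched in a few lines of Python. This is a minimal illustration rather than a measurement tool: the function names and the 10,000 tokens/s throughput are hypothetical, per-token FLOPs are approximated as 2 × parameters (the attention term is reported separately), and the 989 TFLOPS peak is the FP16 figure quoted above.

```python
H100_PEAK_FP16_FLOPS = 989e12  # dense FP16 peak quoted in the text


def attention_flops_per_layer(seq_len: int, hidden: int, constant: float = 2.0) -> float:
    """Attention-score FLOPs for one layer: constant * L_seq^2 * D_hidden."""
    return constant * seq_len ** 2 * hidden


def mfu(tokens_per_second: float, n_params: float,
        peak_flops: float = H100_PEAK_FP16_FLOPS) -> float:
    """Approximate MFU using 2 FLOPs per parameter per token (attention ignored)."""
    achieved_flops = tokens_per_second * 2.0 * n_params
    return achieved_flops / peak_flops


# Hypothetical measurement: a 7B-parameter model processing 10,000 tokens/s.
print(f"MFU: {mfu(10_000, 7e9):.1%}")
# Attention term for a 32-layer model, 2048-token sequence, hidden size 4096.
print(f"Attention FLOPs: {32 * attention_flops_per_layer(2048, 4096):.2e}")
```

Keeping the attention term separate makes it easy to see when long sequences start to matter relative to the parameter-driven term.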

The Reality of MFU in Production

The MFU values achieved in production often surprise newcomers to the field. During training, well-optimized systems routinely achieve 40-60 percent MFU because the workload is consistent and batches are large. However, inference presents a different challenge entirely.

During the prefill phase, where the model processes the entire input context, we typically see 30-45 percent MFU. This phase is compute-bound and benefits from the parallel processing of all input tokens. The generation phase tells a different story, with MFU dropping to just 5-15 percent. This dramatic reduction isn’t a sign of poor optimization—it’s a fundamental consequence of autoregressive generation’s memory-bound nature.

Model size significantly impacts achievable MFU. A 7B parameter model might achieve 25-35 percent MFU during prefill and 8-12 percent during generation on a single GPU. Scale up to a 70B model with tensor parallelism, and you might see 35-45 percent prefill MFU but only 4-8 percent during generation. The larger model achieves higher prefill MFU because it better amortizes memory transfer costs, but lower generation MFU because each token requires accessing more parameters.

Why This Matters: Understanding these MFU realities helps set appropriate optimization targets. Achieving 50 percent MFU during generation would require fundamental algorithmic breakthroughs, not just better engineering. Teams should focus on maximizing prefill MFU while accepting that generation will always be memory-bound.

MFU as an Economic Indicator

The direct relationship between MFU and cost makes it invaluable for capacity planning and hardware selection. The cost per token can be expressed as:

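Cost per Token = (GPU Cost per Hour / 3600 × FLOPs per Token) / (MFU × Peak FLOPS)

Here Peak FLOPS is the per-second hardware peak, so the hourly GPU cost is divided by 3600 to match units.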

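Because cost per token is inversely proportional to MFU, a 10 percent relative improvement in MFU reduces infrastructure costs by about 9 percent (1 - 1/1.1), and larger gains compound accordingly.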

Cost example (holding throughput constant)

Assumptions: 1,000 H100 GPUs at $3/GPU·hour, MFU improves from 20% → 30%.

Calculation:

GPUs needed ∝ 1 / MFU

GPUs_saved = 1000 × (1 - 0.20/0.30) ≈ 333

Hourly_savings = 333 × $3 ≈ $999 ≈ $1,000 per hour

Annual_savings ≈ $1,000 × 24 × 365 ≈ $8.8M per year

In practice, higher MFU often also improves batching and reduces latency, increasing the effective savings.
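The cost relationship and the fleet example can be reproduced with a short Python sketch. The function names are illustrative, the 14 GFLOPs-per-token figure is an assumed value for a 7B model, and the hourly price is converted to a per-second price so the units match the per-second peak throughput.

```python
def cost_per_token(gpu_cost_per_hour: float, flops_per_token: float,
                   mfu: float, peak_flops: float) -> float:
    """Dollar cost of one token on one GPU at a given MFU."""
    cost_per_second = gpu_cost_per_hour / 3600.0
    tokens_per_second = mfu * peak_flops / flops_per_token
    return cost_per_second / tokens_per_second


def gpus_saved(fleet_size: int, mfu_before: float, mfu_after: float) -> float:
    """GPUs freed at constant throughput, since GPUs needed scale as 1 / MFU."""
    return fleet_size * (1.0 - mfu_before / mfu_after)


saved = gpus_saved(1000, 0.20, 0.30)
hourly_savings = saved * 3.0            # $3 per GPU-hour, as in the example
annual_savings = hourly_savings * 24 * 365
print(f"GPUs saved: {saved:.0f}, annual savings: ${annual_savings / 1e6:.2f}M")

# Cost per token falls by the same ratio as MFU rises (0.30 / 0.20 = 1.5x).
before = cost_per_token(3.0, 14e9, 0.20, 989e12)
after = cost_per_token(3.0, 14e9, 0.30, 989e12)
print(f"Cost ratio before/after: {before / after:.2f}")
```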

Hardware Comparison: Effective TFLOPS

A100: $312 \text{ TFLOPS} \cdot 30\% \text{ MFU} = 93.6 \text{ effective TFLOPS}$

H100: $989 \text{ TFLOPS} \cdot 25\% \text{ MFU} = 247.3 \text{ effective TFLOPS}$

The H100 provides 2.6x more effective compute in this scenario, justifying its premium for compute-intensive workloads.

Comprehensive GPU Metrics: Beyond Simple Utilization

With our understanding of hardware architecture and MFU established, we can now explore the full spectrum of metrics available for monitoring NVIDIA GPUs. Each metric provides a different perspective on system behavior, and understanding their relationships is crucial for effective optimization.

The Hierarchy of Utilization Metrics

GPU utilization, the most commonly cited metric, merely indicates the percentage of time when one or more kernels are executing. A GPU showing 100 percent utilization might be performing useful work efficiently, or it might be spinning in inefficient kernels. This metric alone tells us almost nothing about actual performance.

Streaming Multiprocessor (SM) efficiency provides more insight by measuring how effectively active SMs utilize their resources. This includes warp occupancy (the ratio of active warps to maximum possible warps) and instruction throughput. An SM with high occupancy but low instruction throughput suggests memory bottlenecks, while low occupancy with high throughput might indicate kernel launch overhead.

Memory bandwidth utilization reveals whether we’re constrained by data movement. On an H100, achieving 2.5 TB/s out of 3 TB/s theoretical bandwidth (83 percent utilization) might seem good, but if those transfers are inefficient (non-coalesced, redundant), we’re still wasting resources. The relationship between achieved bandwidth and useful work becomes critical.

Tensor Core Utilization: The Hidden Bottleneck

Tensor Core utilization often becomes the limiting factor in achieving high MFU, yet it’s frequently overlooked. The metric isn’t simply whether Tensor Cores are active, but how efficiently they’re being fed with data and how well the problem dimensions align with hardware requirements.

For optimal Tensor Core utilization, matrix dimensions must align with hardware constraints—multiples of 8 for FP16 operations, 16 for INT8. Misaligned dimensions can reduce utilization by 50 percent or more. The new H100 Transformer Engine alleviates some alignment constraints, but understanding these requirements remains crucial for optimization.
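A common way to satisfy these alignment rules is to pad matrix dimensions up to the next multiple. The helper below is a minimal sketch: the names are hypothetical, and the alignment table simply encodes the multiples stated above (8 for FP16, 16 for INT8).

```python
ALIGNMENT = {"fp16": 8, "int8": 16}  # multiples taken from the text above


def round_up(dim: int, multiple: int) -> int:
    """Smallest multiple of `multiple` that is >= dim."""
    return ((dim + multiple - 1) // multiple) * multiple


def padded_shape(m: int, n: int, k: int, dtype: str = "fp16") -> tuple:
    """Pad all three GEMM dimensions to the alignment required for dtype."""
    align = ALIGNMENT[dtype]
    return tuple(round_up(d, align) for d in (m, n, k))


# Example: a vocabulary of 50,257 entries is not a multiple of 8 or 16.
print(round_up(50257, ALIGNMENT["fp16"]))  # 50264
print(round_up(50257, ALIGNMENT["int8"]))  # 50272
```

Padding adds a small amount of wasted compute, which is usually a good trade against falling off the Tensor Core fast path.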

The relationship between Tensor Core utilization and memory bandwidth becomes particularly important during inference. Even with perfect alignment, Tensor Cores can only maintain peak throughput if data arrives fast enough. This creates a careful balance—batch sizes must be large enough to amortize memory transfer costs but small enough to meet latency requirements.

Memory Hierarchy Metrics: Finding the Real Bottleneck

Understanding memory metrics requires thinking hierarchically. L1/shared memory hit rates tell us about kernel efficiency—rates below 80 percent suggest poor data locality. L2 cache hit rates indicate weight reuse effectiveness—critical for models with repeated layer structures. HBM bandwidth utilization reveals whether we’re fundamentally memory-bound.

The introduction of the Memory Bandwidth Utilization (MBU) metric by Databricks provides a complementary view to MFU. MBU measures achieved memory bandwidth versus theoretical peak, helping identify whether computation or memory movement is the limiting factor. When MBU approaches 100 percent while MFU remains low, we know memory bandwidth is the bottleneck.
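MBU can be estimated with the same kind of arithmetic as MFU. The sketch below assumes that each decode step reads the full set of weights plus the KV cache once, which is a simplification. The function names and the 10 ms per-token latency are hypothetical.

```python
H100_PEAK_BANDWIDTH = 3000e9  # bytes/s, the 3 TB/s figure used in the text


def decode_bandwidth(model_bytes: float, kv_cache_bytes: float,
                     seconds_per_token: float) -> float:
    """Estimated achieved bandwidth: bytes read per decode step / step time."""
    return (model_bytes + kv_cache_bytes) / seconds_per_token


def mbu(achieved_bandwidth: float,
        peak_bandwidth: float = H100_PEAK_BANDWIDTH) -> float:
    """Memory Bandwidth Utilization as a fraction of peak."""
    return achieved_bandwidth / peak_bandwidth


# Hypothetical: 14 GB of FP16 weights, ~1.07 GB of KV cache, 10 ms per token.
achieved = decode_bandwidth(14e9, 1.074e9, 0.010)
print(f"MBU: {mbu(achieved):.1%}")
```

Reading this value next to MFU for the same run shows which of the two ceilings the workload is closer to.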

Cache line efficiency becomes critical in attention mechanisms. The irregular access patterns of key-value caches can waste significant bandwidth if not properly managed. Modern implementations like PagedAttention tackle a related problem at the allocation level: by storing the KV cache in fixed-size blocks, they cut the memory lost to fragmentation to a few percent, which leaves room for larger batches and therefore better use of the available bandwidth.

Power and Thermal Metrics: The Overlooked Constraints

Power consumption and thermal behavior significantly impact sustained performance, particularly in dense datacenter deployments. The H100 can consume up to 700W, generating substantial heat that must be managed. Thermal throttling can reduce clock speeds by 30 percent or more, directly impacting achievable MFU.

Dynamic frequency scaling based on workload characteristics means that power-efficient kernels can run at higher clock speeds, improving overall throughput. Understanding the relationship between different operations and power consumption helps in scheduling and workload distribution.

Power Efficiency: TFLOPS per Watt

- H100: $989 \text{ TFLOPS} / 700\text{W} \approx 1.4 \text{ TFLOPS/W}$

- A100: $312 \text{ TFLOPS} / 400\text{W} \approx 0.78 \text{ TFLOPS/W}$

This nearly 2x improvement in power efficiency compounds the H100’s computational advantages.

Bottleneck Analysis: The Mathematics of Performance Limits

Understanding whether your system is compute-bound or memory-bound requires more than just monitoring metrics—it demands understanding the fundamental arithmetic relationships in transformer models. This mathematical framework, combined with architectural knowledge, enables precise bottleneck identification and targeted optimization.

Arithmetic Intensity: The Fundamental Diagnostic Tool

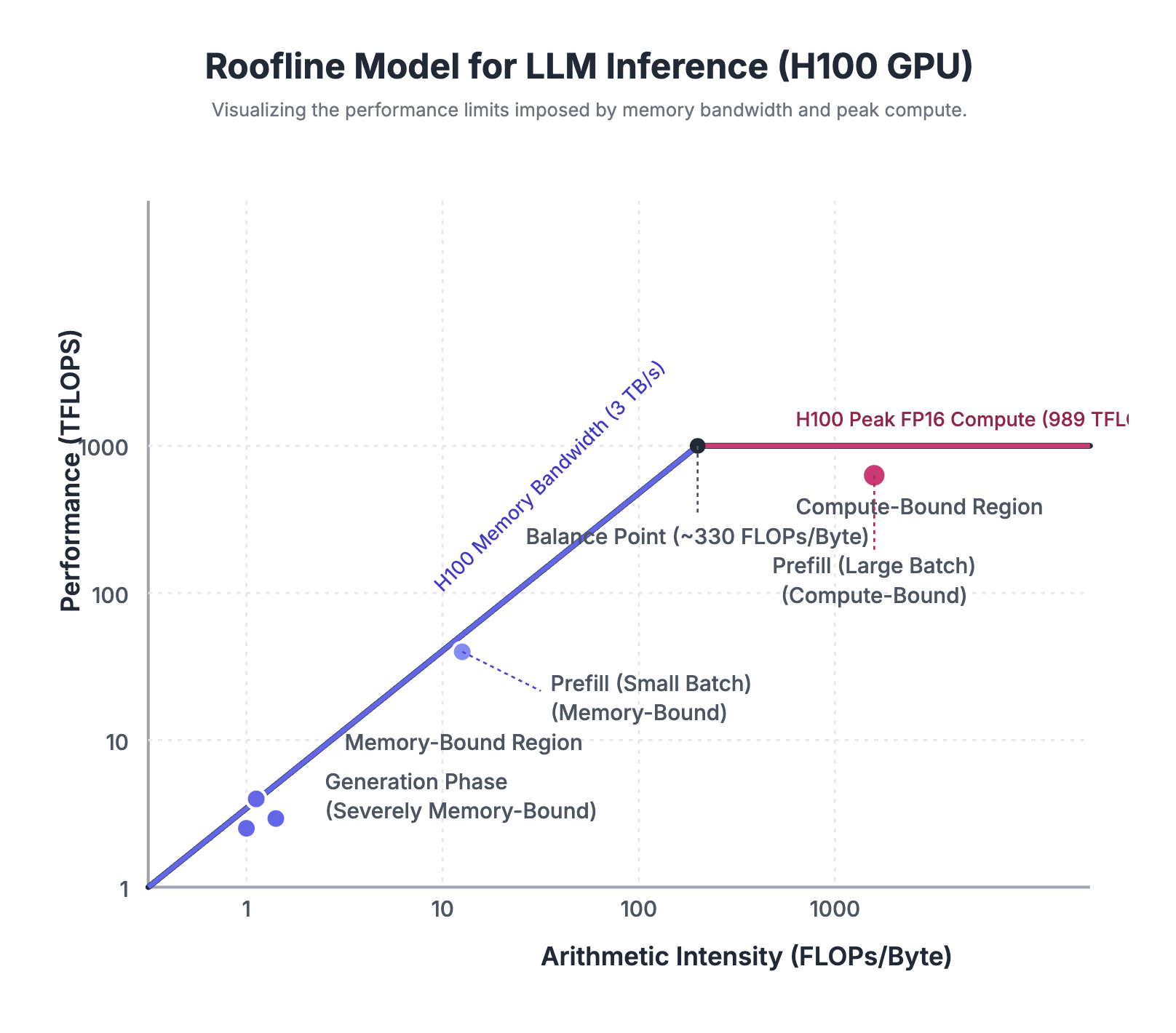

Arithmetic intensity, defined as the ratio of floating-point operations to bytes of memory accessed, provides the key to understanding performance bottlenecks. For any given operation, we can calculate the arithmetic intensity and compare it to the hardware’s balance point—the ratio of peak compute throughput to peak memory bandwidth.

Expressed as a formula:

Balance Point = Peak Compute (FLOPS) / Peak Memory Bandwidth (Bytes/s)

Hardware Balance Points (FP16)

- H100: $989 \text{ TFLOPS} / 3000 \text{ GB/s} \approx 330 \text{ Ops/Byte}$

- A100: $312 \text{ TFLOPS} / 1555 \text{ GB/s} \approx 200 \text{ Ops/Byte}$

When a workload’s arithmetic intensity falls below this threshold, it is memory-bound; above, it is compute-bound.
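The balance points above follow directly from the peak figures. The snippet below is a small sketch using the numbers quoted in this section. The dictionary layout and function name are illustrative only.

```python
# (peak FP16 FLOPS, peak memory bandwidth in bytes/s), as quoted in the text
HARDWARE = {
    "H100": (989e12, 3000e9),
    "A100": (312e12, 1555e9),
}


def balance_point(peak_flops: float, peak_bandwidth: float) -> float:
    """Ops per byte at which compute and memory limits coincide."""
    return peak_flops / peak_bandwidth


for name, (flops, bandwidth) in HARDWARE.items():
    print(f"{name}: {balance_point(flops, bandwidth):.0f} Ops/Byte")
```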

Transformer Arithmetic: Breaking Down the Operations

To apply arithmetic intensity analysis to LLM inference, we must first understand the computational structure of transformers. Each layer consists of two main components: multi-head attention and feed-forward networks, each with distinct computational characteristics.

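The FLOPs per transformer layer break down as follows, with B the batch size, L the sequence length, and H the hidden size (the FFN figures assume an intermediate size of 4H):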

Attention FLOPs (per layer):

- QKV Projections: 6BLH²

- QK^T Computation: 2BL²H

- Attention × V: 2BL²H

- Output Projection: 2BLH²

- Total Attention: 8BLH² + 4BL²H

FFN FLOPs (per layer):

- Up-projection: 8BLH²

- Down-projection: 8BLH²

- Total FFN: 16BLH²

Total FLOPs per layer:

Total = (8BLH² + 4BL²H) + 16BLH² = 24BLH² + 4BL²H
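The per-layer breakdown translates directly into code, which makes it easy to check the totals or plug in other shapes. This is a sketch with hypothetical function names. It assumes standard multi-head attention and an FFN intermediate size of 4H, which is what the 8BLH² terms imply.

```python
def attention_flops(B: int, L: int, H: int) -> int:
    """Attention FLOPs for one layer."""
    qkv_proj = 6 * B * L * H ** 2   # three H x H projections
    scores = 2 * B * L ** 2 * H     # Q @ K^T
    weighted = 2 * B * L ** 2 * H   # attention @ V
    out_proj = 2 * B * L * H ** 2   # output projection
    return qkv_proj + scores + weighted + out_proj


def ffn_flops(B: int, L: int, H: int) -> int:
    """Feed-forward FLOPs for one layer (H -> 4H -> H)."""
    return 8 * B * L * H ** 2 + 8 * B * L * H ** 2


def layer_flops(B: int, L: int, H: int) -> int:
    """Total FLOPs for one transformer layer: 24BLH^2 + 4BL^2H."""
    return attention_flops(B, L, H) + ffn_flops(B, L, H)


# Example shape: batch 1, 2048 tokens, hidden size 4096.
print(f"{layer_flops(1, 2048, 4096) / 1e12:.2f} TFLOPs per layer")
```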

Memory access patterns tell a different story. During the prefill phase, we load model weights once but use them for all tokens in the sequence, achieving good arithmetic intensity. During generation, we load the entire set of model weights to process a single token per sequence, resulting in poor arithmetic intensity that stays near 1 Op/Byte at FP16 regardless of model size, because FLOPs and bytes both grow in proportion to the parameter count.

The Prefill Phase: Compute-Bound Territory

During prefill, when processing an entire input sequence, arithmetic intensity is relatively high. Consider a concrete example with Llama 2 7B processing a 2048-token sequence with batch size 1.

For this prompt, the linear layers perform approximately $2 \cdot 7 \times 10^9 \cdot 2048 \approx 28.7$ trillion FLOPs (2 FLOPs per parameter per token), and the attention score computation adds approximately $2 \cdot 32 \cdot 2048^2 \cdot 4096 \approx 1.1$ trillion FLOPs. Memory traffic is dominated by reading roughly 14 GB of FP16 weights once. This yields an arithmetic intensity of:

AI_prefill = (29.8 × 10¹² FLOPs) / (14 × 10⁹ Bytes) ≈ 2,100 Ops/Byte

This is well above the H100’s balance point of 330, so a long prompt is compute-bound even at batch size 1.

Short prompts behave differently. Because the weights are read once per forward pass no matter how many tokens are processed, arithmetic intensity at FP16 is roughly equal to the number of tokens in flight (batch size × sequence length). A single 128-token prompt reaches only about 128 Ops/Byte and is memory-bound. Batching 32 such prompts raises the count to 4,096 tokens and makes the pass solidly compute-bound.

This analysis explains why batch size has such a profound impact on MFU during prefill for short prompts. Too few tokens in flight leave the GPU memory-bound despite the parallel processing within each sequence. Only when the token count grows sufficiently large do we transition to compute-bound operation where Tensor Cores can operate near peak efficiency.

The Generation Phase: The Memory Bandwidth Wall

Generation phase arithmetic intensity tells a starkly different story. When generating a single token, we must load the entire model (14 GB for Llama 2 7B) to perform approximately 14 billion operations ($2 \cdot 7B$ parameters). This yields an arithmetic intensity of:

AI_gen = (14 × 10⁹ FLOPs) / (14 × 10⁹ Bytes) = 1 Op/Byte

This is two orders of magnitude below the balance point, confirming generation is severely memory-bound.

The KV-cache access further degrades arithmetic intensity. For each generated token, we must read the cached keys and values for all previous tokens. With a 2048-token context, this means accessing $2048 \cdot 32 \cdot 8192 \cdot 2 \approx 1.074$ GB (decimal), or 1.0 GiB (binary), of KV-cache data for each token generated: 2048 tokens × 32 layers × 8192 cached values per token per layer (keys plus values at hidden size 4096) × 2 bytes per FP16 value.
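The generation-phase numbers can be checked with a short sketch. Function names are hypothetical. The model shape (32 layers, hidden size 4096, FP16) follows the Llama 2 7B example above, and the FLOP count uses the 2-FLOPs-per-parameter approximation.

```python
def kv_cache_bytes(seq_len: int, n_layers: int, hidden: int,
                   bytes_per_elem: int = 2) -> int:
    """Bytes of cached keys and values read per generated token."""
    return seq_len * n_layers * (2 * hidden) * bytes_per_elem


def decode_arithmetic_intensity(n_params: float, bytes_per_param: int = 2,
                                kv_bytes: float = 0.0) -> float:
    """FLOPs per byte for one decode step at batch size 1."""
    flops = 2.0 * n_params
    bytes_moved = n_params * bytes_per_param + kv_bytes
    return flops / bytes_moved


kv = kv_cache_bytes(2048, 32, 4096)
print(f"KV cache: {kv / 1e9:.3f} GB ({kv / 2**30:.1f} GiB)")
print(f"AI, weights only:  {decode_arithmetic_intensity(7e9):.2f} Ops/Byte")
print(f"AI, with KV cache: {decode_arithmetic_intensity(7e9, kv_bytes=kv):.2f} Ops/Byte")
```

Including the KV cache in the denominator pushes the intensity slightly below 1 Op/Byte, which is the degradation the text describes.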

This fundamental mathematical reality explains why generation phase MFU rarely exceeds 15 percent. We’re not failing to optimize; we’re hitting the physical limits of memory bandwidth. No amount of kernel optimization can overcome this arithmetic intensity barrier—only architectural changes like larger caches or algorithmic innovations like speculative decoding can help.

Identifying Your Bottleneck: A Systematic Approach

To determine whether your specific workload is compute-bound or memory-bound, follow this systematic analysis:

First, calculate the theoretical arithmetic intensity for your model and batch size, using P = model parameters, B = batch size, N = number of layers, L = sequence length, H = hidden size (≈ n_heads × d_head):

Compute FLOPs (prefill forward pass over L tokens per sequence):

FLOPs_compute ≈ 2·P·B·L + 4·N·B·L²·H

Memory bytes (FP16, 2 bytes/elem):

Bytes_memory ≈ 2·P + 2·B·L·H·N + 4·B·L·H·N

where the three terms correspond to weights, activations, and KV-cache respectively.

Next, measure your achieved arithmetic intensity using GPU metrics. Let tps be tokens/second and fpt be FLOPs/token; let BW be achieved memory bandwidth (bytes/second):

FLOPs_achieved = tps · fpt

AI_achieved = FLOPs_achieved / BW

Compare your measured arithmetic intensity to the hardware balance point. If it’s below the threshold, you’re memory-bound—focus on reducing memory access through techniques like kernel fusion, quantization, or Flash Attention. If it’s above the threshold, you’re compute-bound—consider using lower precision, pruning, or more efficient algorithms.
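The measurement and comparison steps can be sketched as follows. The function names and the sample measurements (150 tokens/s, 2.2 TB/s achieved bandwidth) are hypothetical. The suggested techniques are the ones listed in the paragraph above.

```python
def achieved_ai(tokens_per_second: float, flops_per_token: float,
                achieved_bandwidth: float) -> float:
    """Measured arithmetic intensity: (tps * fpt) / BW, in Ops/Byte."""
    return tokens_per_second * flops_per_token / achieved_bandwidth


def diagnose(ai: float, balance_point: float) -> tuple:
    """Classify the workload and return the matching optimization levers."""
    if ai < balance_point:
        return "memory-bound", ["kernel fusion", "quantization", "Flash Attention"]
    return "compute-bound", ["lower precision", "pruning", "more efficient algorithms"]


# Hypothetical decode measurement on an H100 (balance point ~330 Ops/Byte).
ai = achieved_ai(tokens_per_second=150, flops_per_token=14e9,
                 achieved_bandwidth=2.2e12)
bound, levers = diagnose(ai, balance_point=330)
print(f"AI = {ai:.2f} Ops/Byte -> {bound}; consider: {', '.join(levers)}")
```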

The Roofline Model in Practice

The roofline model visualizes these relationships, showing the performance ceiling imposed by either compute or memory bandwidth. The model creates a two-dimensional space where the x-axis represents arithmetic intensity and the y-axis represents achieved performance in FLOPS.

The “roofline” consists of two parts: a sloped line representing the memory bandwidth limit (performance = bandwidth × arithmetic_intensity) and a horizontal line representing the peak compute performance. The intersection point is the balance point we’ve been discussing. Real workloads appear as points in this space, immediately revealing whether they’re compute or memory limited.
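The two-part ceiling can be written as a single `min`. The sketch below uses the H100 figures from earlier in this guide as defaults. The function names and the sample intensities are illustrative.

```python
def attainable_flops(ai: float, peak_flops: float = 989e12,
                     peak_bandwidth: float = 3000e9) -> float:
    """Roofline ceiling: the lower of the bandwidth slope and the compute roof."""
    return min(peak_flops, peak_bandwidth * ai)


def ridge_point(peak_flops: float = 989e12,
                peak_bandwidth: float = 3000e9) -> float:
    """Arithmetic intensity where the sloped and flat segments meet."""
    return peak_flops / peak_bandwidth


for ai in (1, 10, 100, 1000):
    region = "memory-bound" if ai < ridge_point() else "compute-bound"
    print(f"AI={ai:>5} Ops/Byte -> ceiling {attainable_flops(ai) / 1e12:7.1f} TFLOPS ({region})")
```

Plotting `attainable_flops` on log-log axes, with measured workloads as points beneath it, gives the familiar roofline chart.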

For LLM inference, prefill operations typically appear in the middle region, potentially reaching the compute roofline with sufficient batch size. Generation operations cluster far to the left, firmly in memory-bound territory. This visual representation makes optimization opportunities immediately apparent.

Bottleneck-Specific Optimization Strategies

Once you’ve identified your bottleneck, optimization strategies become clear and targeted.

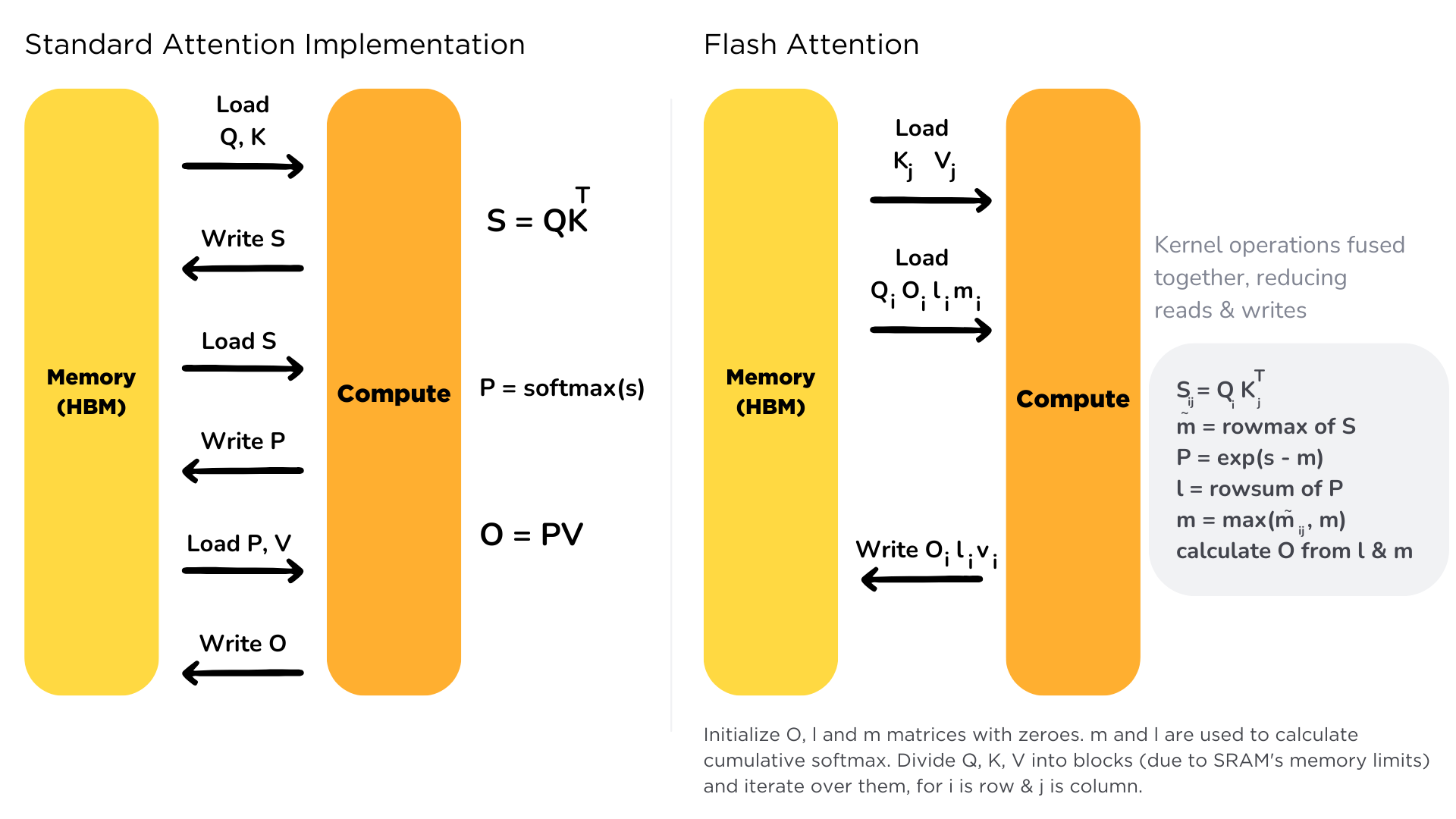

For memory-bound operations, focus on reducing memory traffic. Operator fusion combines multiple operations to avoid intermediate memory writes. Quantization reduces the bytes per parameter, effectively increasing arithmetic intensity. Flash Attention keeps attention computations in shared memory, dramatically reducing HBM access. KV-cache compression techniques reduce the memory footprint of cached attention states.

For compute-bound operations, the strategies differ entirely. Use the highest-throughput precision your accuracy requirements allow—FP8 on H100 can double throughput versus FP16. Ensure tensor dimensions align with Tensor Core requirements (multiples of 8 for FP16, 16 for INT8). Consider structured sparsity to leverage the H100’s sparse Tensor Core operations. Implement better batching strategies to amortize overhead.

The key insight is that optimizing a memory-bound workload for compute efficiency (or vice versa) wastes effort. Understanding your position relative to the roofline model ensures optimization efforts target the actual bottleneck.

Dynamic Bottleneck Behavior

Bottlenecks aren’t static—they shift based on workload characteristics and system state. A system that’s compute-bound with large batches becomes memory-bound with small batches. Long sequences increase the compute requirements of attention quadratically, potentially shifting from memory to compute bound.

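Thermal throttling can dynamically reduce compute capacity, which lowers the balance point. A workload that sat just below the balance point, and was therefore memory-bound, can become compute-bound on a throttled GPU, while achievable throughput falls for workloads that were already compute-bound. Understanding these dynamics helps explain performance variations and guides adaptive optimization strategies.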

Modern inference systems must handle this dynamism gracefully. Techniques like dynamic batching adjust batch sizes based on queue depth and latency requirements, implicitly navigating the compute-memory tradeoff. Adaptive precision selection can switch between FP16 and FP8 based on whether the system is compute or memory bound.

Case Study: Optimizing a Memory-Bound Workload

Consider a production system serving Llama 2 13B with batch size 1, achieving only 5% MFU during generation. Analysis reveals an arithmetic intensity of 0.8 Ops/Byte—severely memory-bound.

The optimization strategy focuses entirely on memory traffic reduction.

- INT8 Quantization: Halves memory requirements, doubling AI to 1.6 Ops/Byte.

- Flash Attention: Reduces attention-related memory traffic by ~75%.

- Continuous Batching: Increases average batch size to 8, multiplying AI by 8x.

After these optimizations, arithmetic intensity reaches approximately 12 Ops/Byte—still memory-bound but much improved. MFU increases from 5% to 18%, a 3.6x improvement. Further optimization would require architectural changes like model sharding to fit in GPU cache or algorithmic innovations like speculative decoding.

This systematic approach—measure, analyze, identify bottleneck, apply targeted optimization, repeat—transforms random experimentation into engineering discipline. Understanding the mathematical foundations of transformer inference enables predictable, reproducible performance improvements.

Advanced Monitoring Tools and Techniques

The complexity of modern GPU architectures demands sophisticated monitoring tools. Each tool in NVIDIA’s ecosystem serves a specific purpose, from real-time production monitoring to deep kernel-level analysis.

The NVIDIA-SMI Foundation

NVIDIA-SMI, built on the NVIDIA Management Library (NVML), provides the foundation for GPU monitoring. While often dismissed as too basic, it offers several advanced capabilities crucial for production systems. The tool’s ability to continuously monitor with minimal overhead (less than 1 percent performance impact) makes it ideal for always-on production monitoring.

The key to effective nvidia-smi usage lies in understanding its sampling behavior. Utilization metrics are sampled over 1/6 second intervals, meaning short-duration kernels might be missed entirely. Memory bandwidth measurements aggregate over one-second windows, potentially hiding burst behavior. Understanding these limitations helps interpret the data correctly.

Advanced nvidia-smi features include event-triggered logging, which can capture detailed state information when specific conditions occur, and persistence mode management, which keeps the GPU driver loaded so that the first CUDA call after an idle period does not pay driver initialization latency. The tool’s ability to set and monitor power caps enables dynamic power management strategies that balance performance with thermal constraints.
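For lightweight always-on collection, nvidia-smi’s CSV query mode is easy to wrap. The sketch below builds a `--query-gpu` command and parses its output. The helper names and the choice of fields are assumptions made for illustration, and `sample()` requires nvidia-smi to be installed on the host.

```python
import csv
import io
import subprocess

QUERY_FIELDS = ["utilization.gpu", "utilization.memory", "power.draw", "temperature.gpu"]


def build_command(fields=QUERY_FIELDS):
    """nvidia-smi invocation that prints one CSV row per GPU, without units."""
    return ["nvidia-smi", f"--query-gpu={','.join(fields)}",
            "--format=csv,noheader,nounits"]


def _to_float(value):
    try:
        return float(value)
    except ValueError:
        return None  # e.g. "[N/A]" when a GPU does not report the field


def parse_rows(text, fields=QUERY_FIELDS):
    """Turn nvidia-smi CSV output into one dict per GPU."""
    rows = []
    for record in csv.reader(io.StringIO(text), skipinitialspace=True):
        if record:
            rows.append({name: _to_float(v) for name, v in zip(fields, record)})
    return rows


def sample():
    """Run nvidia-smi once and return parsed rows (needs a GPU host)."""
    result = subprocess.run(build_command(), capture_output=True, text=True, check=True)
    return parse_rows(result.stdout)


# Parsing a captured sample, so this runs without a GPU:
print(parse_rows("97, 45, 612.34, 71\n"))
```

Because utilization is sampled over coarse intervals, polling faster than a few times per second adds little information.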

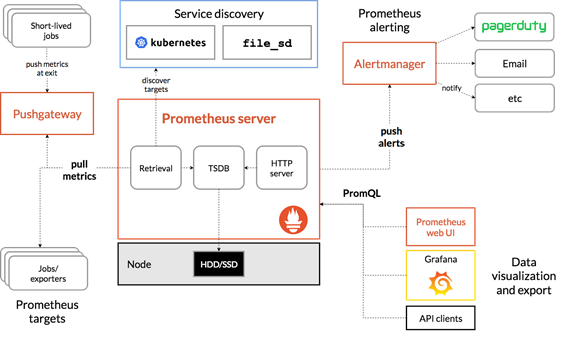

DCGM: Datacenter-Scale Monitoring

The Data Center GPU Manager (DCGM) extends monitoring capabilities to fleet scale while providing more detailed metrics than nvidia-smi. Its architecture, with a central daemon managing data collection and client libraries for access, enables efficient monitoring of hundreds of GPUs with minimal overhead.

DCGM’s field-based metric system provides over 100 distinct metrics, each identified by a unique field ID. For LLM inference, critical fields include DCGM_FI_PROF_SM_ACTIVE (1002) for SM utilization, DCGM_FI_PROF_PIPE_TENSOR_ACTIVE (1004) for Tensor Core activity, and DCGM_FI_PROF_DRAM_ACTIVE (1005) for memory interface utilization.
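The three field IDs above can be watched together with `dcgmi dmon`. The sketch below only builds the command and offers a rough reading of the resulting ratios. The helper names and the 0.8 / 0.3 thresholds are hypothetical, not DCGM recommendations.

```python
PROFILING_FIELDS = {
    "sm_active": 1002,      # DCGM_FI_PROF_SM_ACTIVE
    "tensor_active": 1004,  # DCGM_FI_PROF_PIPE_TENSOR_ACTIVE
    "dram_active": 1005,    # DCGM_FI_PROF_DRAM_ACTIVE
}


def dmon_command(fields=PROFILING_FIELDS, interval_ms=1000):
    """Command line that streams the selected fields at a fixed interval."""
    ids = ",".join(str(field_id) for field_id in fields.values())
    return ["dcgmi", "dmon", "-e", ids, "-d", str(interval_ms)]


def interpret(sm_active, tensor_active, dram_active, high=0.8, low=0.3):
    """Rough reading of the three ratios (each in the range 0..1)."""
    if dram_active >= high and tensor_active < low:
        return "memory-bound: DRAM interface busy, Tensor Cores mostly idle"
    if tensor_active >= high:
        return "compute-bound: Tensor Cores near saturation"
    if sm_active < low:
        return "underfed: SMs mostly idle (launch overhead, small batches, host stalls)"
    return "mixed: no single dominant limiter"


print(" ".join(dmon_command()))
print(interpret(sm_active=0.9, tensor_active=0.1, dram_active=0.95))
```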

The profiling metrics available through DCGM provide insights that nvidia-smi cannot. These include SM occupancy, per-pipe activity for the Tensor, FP16, FP32, and FP64 pipelines, DRAM activity, and PCIe and NVLink traffic. Finer-grained data such as cache hit rates and memory access patterns requires a kernel-level profiler. The ability to correlate DCGM metrics across multiple GPUs reveals system-level bottlenecks that individual GPU monitoring might miss.
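To make the three field IDs above concrete, here is a minimal sketch that builds a `dcgmi dmon` command for them and labels a single sample. The `classify` helper and its `high` and `low` thresholds are hypothetical and would need tuning per model; DCGM reports these profiling fields as ratios between 0 and 1.

```python
# Field IDs quoted in the text; values are ratios in the range 0.0 to 1.0.
DCGM_FIELDS = {
    1002: "sm_active",
    1004: "tensor_active",
    1005: "dram_active",
}


def dcgmi_dmon_command(interval_ms=1000):
    """Return an argv list asking dcgmi to stream the three fields above."""
    field_ids = ",".join(str(fid) for fid in sorted(DCGM_FIELDS))
    return ["dcgmi", "dmon", "-e", field_ids, "-d", str(interval_ms)]


def classify(sample, high=0.5, low=0.2):
    """Label one sample; the thresholds are hypothetical starting points."""
    if sample["sm_active"] < low:
        return "underutilized"
    if sample["dram_active"] >= high and sample["tensor_active"] < low:
        return "memory-bound"
    if sample["tensor_active"] >= high:
        return "compute-bound"
    return "mixed"


# Made-up samples resembling a generation step and a prefill step.
decode_like = {"sm_active": 0.90, "tensor_active": 0.10, "dram_active": 0.80}
prefill_like = {"sm_active": 0.95, "tensor_active": 0.70, "dram_active": 0.30}
print(classify(decode_like), classify(prefill_like))
```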

Nsight Systems: Application-Level Profiling

Nsight Systems provides a timeline view of application execution, revealing the interplay between CPU and GPU operations. For LLM inference, this exposes critical inefficiencies like CPU-GPU synchronization bottlenecks, unnecessary memory transfers, and kernel launch overhead.

The tool’s ability to trace CUDA API calls, kernel executions, and memory transfers simultaneously creates a complete picture of application behavior. Custom NVTX markers can annotate different phases of inference (tokenization, prefill, generation), making performance analysis more intuitive.
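The phase annotation described above might look like the following sketch. It assumes PyTorch is the inference runtime and uses `torch.cuda.nvtx.range_push` and `range_pop`; when PyTorch or a GPU is missing, the helper degrades to a plain wall-clock timer. The `phase` context manager and the `phase_seconds` dictionary are names invented for this example.

```python
import time
from contextlib import contextmanager

try:
    import torch
    _NVTX = torch.cuda.nvtx if torch.cuda.is_available() else None
except ImportError:  # no PyTorch: keep the timing, skip the NVTX ranges
    _NVTX = None

# Accumulated host-side seconds per phase (hypothetical bookkeeping).
phase_seconds = {}


@contextmanager
def phase(name):
    """Open an NVTX range named `name` and record host-side elapsed time."""
    if _NVTX is not None:
        _NVTX.range_push(name)
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed = time.perf_counter() - start
        phase_seconds[name] = phase_seconds.get(name, 0.0) + elapsed
        if _NVTX is not None:
            _NVTX.range_pop()


# Placeholder bodies stand in for the real tokenizer and model calls.
with phase("tokenization"):
    tokens = "hello world".split()
with phase("prefill"):
    pass
with phase("generation"):
    pass
print(sorted(phase_seconds))
```

Because CUDA kernels launch asynchronously, the host-side timings are only indicative; the NVTX ranges in the Nsight Systems timeline (captured, for example, with `nsys profile --trace=cuda,nvtx`) show where the GPU time actually goes.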

The overhead of Nsight Systems (typically 5-20 percent) makes it unsuitable for production monitoring but invaluable for development optimization. The visual timeline immediately reveals problems like serialized operations that could run concurrently or gaps between kernels indicating scheduling inefficiencies.

Optimization Techniques and Their Metric Signatures

Modern LLM inference optimization employs sophisticated techniques that produce distinctive patterns in GPU metrics. Understanding these signatures enables rapid diagnosis and systematic improvement.

Flash Attention: Transforming Memory Access Patterns

Flash Attention restructures attention computation: it processes the score matrix in tiles that stay in on-chip shared memory instead of writing the full matrix of intermediate results to HBM. This change produces distinctive metric signatures that confirm the optimized kernel is actually in use.

When Flash Attention is working correctly, HBM bandwidth utilization during attention computation drops by 50-80 percent while SM utilization increases. L1/shared memory throughput increases dramatically, often exceeding 10 TB/s aggregate across all SMs. The MFU during attention phases can improve by 1.5-2x, though this improvement is most pronounced for longer sequences where memory bandwidth typically dominates.

The H100’s larger shared memory (up to 228 KB per SM) enables larger tile sizes than previous generations, reducing the number of passes required. Combined with the TMA’s ability to asynchronously load the next tile while computing the current one, this can achieve near-perfect overlap of memory and computation.
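A back-of-the-envelope traffic model helps explain why HBM bandwidth drops. The sketch below counts bytes moved for one attention head under two simplifying assumptions: the standard kernel writes and re-reads the full score and probability matrices, and the tiled kernel re-reads K and V once per tile of query rows. The function names and the default tile size are illustrative, and the model ignores caching, masking, and the backward pass.

```python
import math


def standard_attention_hbm_bytes(seq_len, head_dim, bytes_per_elem=2):
    """HBM traffic for one head when S = QK^T and P = softmax(S) are materialized."""
    n, d = seq_len, head_dim
    qkvo = 4 * n * d      # read Q, K, V and write O once each
    scores = 4 * n * n    # write S, read S, write P, read P
    return (qkvo + scores) * bytes_per_elem


def tiled_attention_hbm_bytes(seq_len, head_dim, q_tile_rows=128, bytes_per_elem=2):
    """HBM traffic when scores stay on-chip and K, V are re-read once per Q tile."""
    n, d = seq_len, head_dim
    q_tiles = math.ceil(n / q_tile_rows)
    q_and_o = 2 * n * d            # read Q once, write O once
    k_and_v = 2 * n * d * q_tiles  # stream K and V for every Q tile
    return (q_and_o + k_and_v) * bytes_per_elem


for n in (1024, 4096, 16384):
    std = standard_attention_hbm_bytes(n, 128)
    tiled = tiled_attention_hbm_bytes(n, 128, q_tile_rows=128)
    print(n, f"reduction = {1 - tiled / std:.0%}")
```

With a head dimension of 128 and 128-row tiles, this model gives a 50 percent reduction at every sequence length. Doubling `q_tile_rows` halves the K and V re-reads, which is one way to read the benefit of the larger shared memory.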

Continuous Batching: Dynamic Resource Utilization

Continuous batching replaces static batches with dynamic scheduling, allowing requests of different lengths to process together. This technique produces characteristic saw-tooth patterns in GPU utilization metrics as batches naturally grow and shrink.

Effective continuous batching maintains average GPU utilization above 70 percent while keeping variance below 20 percent. The queue depth typically runs at 1.5-2x the optimal batch size, providing a buffer for arrival rate variations. Memory fragmentation should remain below 5 percent, indicating efficient memory management.

The impact on MFU is substantial—typically improving average MFU by 20-40 percent by maintaining consistent GPU saturation. The technique is particularly effective for services with variable request rates, where static batching would either waste resources or introduce unnecessary latency.
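The utilization gain can be seen in a toy simulation. The sketch below assumes every request is already queued, each decode step produces one token per active slot, and a freed slot is refilled on the next step; the function names and the request lengths are made up for illustration.

```python
from collections import deque


def static_batching_steps(lengths, batch_size):
    """Decode steps when each batch runs until its longest request finishes."""
    steps = 0
    for start in range(0, len(lengths), batch_size):
        steps += max(lengths[start:start + batch_size])
    return steps


def continuous_batching_steps(lengths, batch_size):
    """Decode steps when a finished slot is refilled from the queue immediately."""
    queue = deque(lengths)
    running = []  # remaining tokens for each active request
    steps = 0
    while queue or running:
        while queue and len(running) < batch_size:
            running.append(queue.popleft())
        steps += 1
        # Each active request emits one token; requests with none left drop out.
        running = [left - 1 for left in running if left > 1]
    return steps


def slot_utilization(lengths, batch_size, steps):
    """Fraction of (step, slot) pairs that produced a token."""
    return sum(lengths) / (steps * batch_size)


lengths = [10, 2, 2, 2, 10, 2, 2, 2]  # output tokens per request (hypothetical)
static = static_batching_steps(lengths, batch_size=4)
continuous = continuous_batching_steps(lengths, batch_size=4)
print(static, continuous)  # 20 12
print(round(slot_utilization(lengths, 4, static), 2),
      round(slot_utilization(lengths, 4, continuous), 2))
```

In this example the short requests leave slots idle for most of each static batch, while the continuous scheduler starts the second long request as soon as a slot frees up.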

PagedAttention: Memory Efficiency Revolution

PagedAttention applies virtual memory concepts to KV-cache management, storing attention caches in non-contiguous blocks. This produces distinctive memory utilization patterns that confirm proper operation.

With PagedAttention, the share of allocated KV-cache memory that holds live tokens can exceed 95 percent. For systems that preallocate contiguous memory per request, the vLLM authors report roughly 20 to 40 percent. Block utilization metrics should show over 90 percent of allocated blocks actively used. The technique enables 2-4x larger effective batch sizes with the same memory, directly improving throughput.

The metric signatures include steady memory allocation rates (rather than large chunks), consistent block recycling patterns, and high cache hit rates for shared prefixes. When combined with continuous batching, PagedAttention enables near-optimal memory utilization while maintaining low latency.
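The block-table idea can be sketched in a few lines. The `PagedKVCache` class below is a simplified illustration written for this article, not vLLM’s implementation: it tracks only which fixed-size physical blocks each sequence owns, and omits prefix sharing and copy-on-write.

```python
class PagedKVCache:
    """Toy block allocator: each sequence owns a list of fixed-size blocks."""

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))
        self.block_tables = {}  # seq_id -> list of physical block ids
        self.seq_lens = {}      # seq_id -> number of cached tokens

    def append_token(self, seq_id):
        """Reserve room for one more token, taking a new block only when needed."""
        length = self.seq_lens.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if length % self.block_size == 0:  # no block yet, or the last one is full
            if not self.free_blocks:
                raise MemoryError("no free KV-cache blocks")
            table.append(self.free_blocks.pop())
        self.seq_lens[seq_id] = length + 1

    def free(self, seq_id):
        """Return all blocks of a finished sequence to the free list."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.seq_lens.pop(seq_id, None)

    def utilization(self):
        """Fraction of allocated token slots that hold a cached token."""
        allocated = sum(len(t) for t in self.block_tables.values()) * self.block_size
        return sum(self.seq_lens.values()) / allocated if allocated else 0.0


cache = PagedKVCache(num_blocks=8, block_size=16)
for _ in range(40):
    cache.append_token("a")
for _ in range(16):
    cache.append_token("b")
paged = cache.utilization()  # 56 tokens in 4 blocks of 16 slots
naive = 56 / (2 * 128)       # same tokens with a hypothetical 128-slot reservation each
print(paged, naive)
```

The waste in the paged case is bounded by one partially filled block per sequence, whereas the contiguous reservation wastes everything between the current length and the assumed maximum.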

Quantization: Precision-Performance Tradeoffs

Quantization techniques produce clear changes in metric patterns that indicate their effectiveness. FP16 to INT8 quantization typically doubles Tensor Core throughput while halving memory bandwidth requirements. The H100’s FP8 support can achieve similar improvements with minimal accuracy loss.

Successful quantization shows Tensor Core utilization increasing proportionally with the precision reduction (2x for FP16→INT8). Memory bandwidth utilization decreases by the same factor, often relieving memory bottlenecks. MFU improvements vary but typically range from 1.4-1.9x for compute-bound phases.

The key metric to watch is the balance between compute and memory utilization. Quantization can shift a memory-bound workload to compute-bound, fundamentally changing optimization strategies. This shift appears as increased SM efficiency and decreased memory controller activity.
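The bandwidth side of this trade-off is simple arithmetic. The sketch below estimates a lower bound on one generation step by assuming every weight is read from HBM exactly once and nothing else (KV cache, activations) is counted. The 3.35 TB/s bandwidth figure is an assumed peak for an H100 SXM part, and the 7B parameter count is only an example.

```python
def decode_step_floor_ms(num_params, bytes_per_param, hbm_bytes_per_s):
    """Lower bound on one decode step when every weight is read from HBM once."""
    return num_params * bytes_per_param / hbm_bytes_per_s * 1e3


H100_HBM_BYTES_PER_S = 3.35e12  # assumption: peak HBM3 bandwidth of an H100 SXM

floors = {}
for name, width in [("FP16", 2), ("INT8 or FP8", 1)]:
    floors[name] = decode_step_floor_ms(7e9, width, H100_HBM_BYTES_PER_S)
    print(f"{name}: {floors[name]:.2f} ms per step")
```

Halving the bytes per weight halves the floor, which is why memory-bound generation benefits from quantization even when Tensor Core throughput is not the limiting factor.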

Speculative Decoding: Trading Compute for Latency

Speculative decoding uses a smaller “draft” model to predict multiple tokens, then validates them with the full model. This produces unique metric patterns: burst compute activity during speculation followed by validation phases.

Effective speculative decoding shows acceptance rates above 60 percent, meaning most speculated tokens are correct. The compute utilization pattern shows characteristic dual-phase behavior: low utilization during drafting, high during validation. Overall MFU might decrease, but per-token latency improves by 2-3x when properly tuned.

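The memory access patterns reveal the technique’s efficiency. A draft model small enough to fit largely within the H100’s 50 MB L2 cache shows high cache hit rates; a larger draft model streams its weights from HBM on every drafting step, which shows up as DRAM activity during the drafting phase. The validation phase should show coalesced memory access as multiple tokens validate simultaneously.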
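How the acceptance rate translates into speedup can be estimated with the expressions from Leviathan et al. (2022). The sketch assumes each drafted token is accepted independently with probability `alpha`, that `gamma` tokens are drafted per validation pass, and that `cost_ratio` is the time of one draft step divided by the time of one target-model step; the example values are hypothetical.

```python
def expected_tokens_per_step(alpha, gamma):
    """Expected tokens produced per target-model validation pass."""
    if alpha >= 1.0:
        return gamma + 1
    return (1 - alpha ** (gamma + 1)) / (1 - alpha)


def expected_speedup(alpha, gamma, cost_ratio):
    """Expected wall-clock speedup over decoding with the target model alone."""
    return expected_tokens_per_step(alpha, gamma) / (gamma * cost_ratio + 1)


for alpha in (0.6, 0.8):
    tokens = expected_tokens_per_step(alpha, gamma=4)
    speedup = expected_speedup(alpha, gamma=4, cost_ratio=0.05)
    print(f"alpha={alpha}: {tokens:.2f} tokens/pass, {speedup:.2f}x")
```

The model makes the sensitivity visible: at a 60 percent acceptance rate and four drafted tokens the estimate is just under 2x, and it rises quickly as acceptance improves or the draft model gets cheaper.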

Production Deployment Best Practices

Transitioning from optimization in development to production deployment requires systematic approaches to monitoring, alerting, and continuous improvement.

Establishing Baseline Metrics

Before optimization, establish comprehensive baselines for your specific models and hardware. These baselines should include MFU for both prefill and generation phases, memory bandwidth utilization across different batch sizes, latency percentiles (p50, p95, p99) for various sequence lengths, and power consumption under sustained load.
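Two of these baseline quantities are easy to compute from logs. The sketch below estimates MFU with the common approximation of 2 FLOPs per parameter per token and derives latency percentiles with the nearest-rank method. The 989 TFLOPS peak is an assumed dense BF16 figure for an H100 SXM part, and the throughput and latency numbers are invented for illustration.

```python
import math


def mfu(num_params, tokens_per_s, peak_flops_per_s):
    """Model FLOPs Utilization using ~2 FLOPs per parameter per token."""
    return 2 * num_params * tokens_per_s / peak_flops_per_s


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty sequence of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def latency_baseline(latencies_ms):
    """Return the p50, p95, and p99 latencies named in the text."""
    return {f"p{p}": percentile(latencies_ms, p) for p in (50, 95, 99)}


H100_PEAK_FLOPS = 989e12  # assumption: dense BF16 peak of an H100 SXM

print(round(mfu(7e9, 10_000, H100_PEAK_FLOPS), 3))
print(latency_baseline(list(range(1, 101))))
```

Keeping one such record per model, phase, and batch size makes later comparisons against the baseline mechanical rather than anecdotal.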

Baseline establishment should span at least one week of production traffic to capture variations. Daily patterns, weekend differences, and special events all impact metric distributions. Understanding normal variation prevents false alerts and helps identify genuine problems.

The baseline must differentiate between model architectures. A 7B parameter model baseline differs substantially from a 70B model baseline, even on identical hardware. Separate baselines for different operation modes (batch inference, streaming, interactive) prevent inappropriate comparisons.

Implementing Effective Alerting

Alert fatigue destroys operational effectiveness, so alerts must be both actionable and important. Critical alerts should trigger only for service-impacting conditions: MFU dropping below 50 percent of baseline for sustained periods, memory utilization exceeding 95 percent with allocation failures, or thermal throttling reducing clock speeds.

Warning-level alerts identify degradation before it impacts service: MFU variance exceeding 20 percent over five-minute windows, queue depths growing beyond 2x normal, or power consumption approaching thermal design limits. These alerts enable proactive intervention.

Informational monitoring tracks optimization opportunities without generating alerts: batch size efficiency below target, quantization candidates based on compute patterns, or scheduling inefficiencies revealed by utilization gaps. Regular review of these metrics drives continuous improvement.
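The critical and warning tiers above can be encoded as a small rule function. This is a sketch under stated assumptions: one call evaluates one five-minute window of MFU samples, "variance" is read as the coefficient of variation, and the argument names are hypothetical rather than taken from any monitoring product. Power and informational checks are omitted for brevity.

```python
from statistics import mean, pstdev


def alert_level(mfu_window, baseline_mfu, mem_util, alloc_failures,
                thermal_throttled, queue_depth, normal_queue_depth):
    """Return 'critical', 'warning', or 'ok' for one five-minute window."""
    avg_mfu = mean(mfu_window)
    if (avg_mfu < 0.5 * baseline_mfu
            or (mem_util > 0.95 and alloc_failures > 0)
            or thermal_throttled):
        return "critical"
    # Assumption: "variance" means standard deviation relative to the mean.
    variation = pstdev(mfu_window) / avg_mfu if avg_mfu else 0.0
    if variation > 0.20 or queue_depth > 2 * normal_queue_depth:
        return "warning"
    return "ok"


healthy = alert_level([0.30, 0.31, 0.29], 0.30, 0.80, 0, False, 8, 8)
degraded = alert_level([0.10, 0.10, 0.10], 0.30, 0.80, 0, False, 8, 8)
unstable = alert_level([0.20, 0.40, 0.30], 0.30, 0.80, 0, False, 8, 8)
print(healthy, degraded, unstable)
```

Requiring the condition to hold across several consecutive windows before paging anyone is a natural extension that keeps the critical tier limited to sustained problems.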

Continuous Optimization Workflows

Production systems require continuous optimization as models, traffic patterns, and requirements evolve. Establish weekly metric reviews comparing current performance to baselines and identifying degradation or improvement opportunities.

A/B testing frameworks should include metric collection for both control and experiment groups. Beyond functional metrics like accuracy, collect detailed performance metrics to understand the full impact of changes. A model change that improves accuracy but degrades MFU by 30 percent might not be worth deploying.

Capacity planning must account for metric trends. If MFU gradually degrades as model complexity increases, infrastructure requirements grow super-linearly. Understanding these relationships enables accurate forecasting and budget planning.
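The super-linear effect follows directly from the MFU definition. The sketch below sizes a fleet from a target throughput, again using roughly 2 FLOPs per parameter per token and an assumed 989 TFLOPS peak per GPU; it ignores memory capacity, parallelism overhead, and latency targets, and the workload numbers are invented.

```python
import math


def gpus_required(tokens_per_s, num_params, mfu, peak_flops_per_s):
    """GPUs needed to sustain tokens_per_s at a given MFU."""
    per_gpu_tokens_per_s = mfu * peak_flops_per_s / (2 * num_params)
    return math.ceil(tokens_per_s / per_gpu_tokens_per_s)


PEAK = 989e12  # assumption: dense BF16 peak of an H100 SXM

small = gpus_required(500_000, 7e9, mfu=0.40, peak_flops_per_s=PEAK)
large = gpus_required(500_000, 70e9, mfu=0.30, peak_flops_per_s=PEAK)
print(small, large)  # 18 236
```

In this example the model grows 10x, but because MFU also falls from 40 to 30 percent, the GPU count grows roughly 13x.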

Multi-Tenant Optimization Strategies

Production systems rarely serve single models in isolation. Multi-tenant scheduling must balance resource utilization with quality of service, creating complex optimization challenges.

GPU sharing strategies depend on workload characteristics. Time-slicing works well for similar models with predictable resource requirements. Multi-Instance GPU (MIG) provides hardware isolation but reduces flexibility. Spatial sharing requires careful memory management to prevent interference.

Metric collection in multi-tenant environments requires attribution to specific tenants. Per-model MFU tracking reveals which models efficiently use resources. Memory attribution prevents one model from starving others. Power consumption tracking enables accurate cost allocation.

The scheduling algorithm must consider both immediate and future resource availability. Greedy scheduling might achieve high instantaneous utilization but create future bottlenecks. Predictive scheduling based on historical patterns improves overall system efficiency.

Future Directions and Emerging Patterns

The landscape of LLM inference optimization continues evolving rapidly. Understanding emerging patterns helps prepare for future developments.

Algorithmic Innovations

Improvements to the attention mechanism continue to emerge. Techniques like Linear Attention and Performer reduce the cost of attention from O(n²) to O(n) in the sequence length n, fundamentally changing the computational requirements. These approaches have not yet matched the quality of standard attention, but progress is rapid.

Mixture of Experts (MoE) architectures enable larger models without proportional compute increases. By activating only relevant experts for each token, MoE models achieve effective parameter counts far exceeding dense models while maintaining manageable computational requirements. The metric patterns for MoE models differ substantially, requiring new optimization approaches.

Retrieval-augmented generation (RAG) shifts computation from parameter storage to dynamic retrieval. This architectural change produces different bottlenecks—network I/O and database access rather than GPU memory bandwidth. Understanding these patterns becomes crucial as RAG adoption increases.

Software Framework Evolution

The competition between inference frameworks drives rapid innovation. vLLM’s PagedAttention, TensorRT-LLM’s kernel fusion, and DeepSpeed’s pipeline parallelism each offer unique advantages. Framework selection significantly impacts achievable metrics—the same model might achieve 30 percent MFU with one framework and 45 percent with another.

Automatic optimization techniques reduce the expertise required for high performance. Compilers that automatically select optimal kernel implementations, batch sizes, and parallelism strategies democratize optimization. However, understanding underlying metrics remains crucial for pushing beyond automatic optimization limits.

The convergence of training and inference frameworks simplifies deployment but introduces complexity. Frameworks must now optimize for both phases, with different requirements and bottlenecks. This convergence produces new metric patterns that require careful interpretation.

Conclusion: The Path to Excellence

Mastering GPU monitoring for LLM inference requires deep understanding of hardware architecture, comprehensive metric collection, and systematic optimization approaches. The H100’s revolutionary architecture provides unprecedented capability, but realizing its potential demands expertise in interpreting complex metric relationships and applying appropriate optimization techniques.

The journey from basic GPU utilization monitoring to sophisticated MFU optimization transforms both system performance and economics. Organizations that master these techniques achieve 2-10x performance improvements, directly impacting service quality and operational costs.

Remember that MFU is not just a metric—it’s a philosophy of efficiency that permeates every aspect of LLM deployment. By understanding the intricate dance between compute and memory, between hardware capability and algorithmic requirements, we can build inference systems that deliver breakthrough performance at sustainable costs.

The future of LLM inference belongs to those who can see beyond surface-level metrics to understand the deep patterns of GPU behavior. Armed with the knowledge in this guide, you’re equipped to join the ranks of teams achieving world-class inference performance. The difference between amateur and professional deployment isn’t just knowledge—it’s the systematic application of that knowledge to continuously improve and optimize.

References

- NVIDIA H100 Architecture: NVIDIA. (2022). NVIDIA H100 Tensor Core GPU Architecture: The Engine of the World’s AI Infrastructure. NVIDIA Whitepaper.

- Attention Is All You Need: Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, Ł., & Polosukhin, I. (2017). Attention Is All You Need. arXiv preprint arXiv:1706.03762.

- FlashAttention-2: Dao, T. (2023). FlashAttention-2: Faster Attention with Better Parallelism and Work Partitioning. arXiv preprint arXiv:2307.08691.

- CUDA MODE (Flash Attention 2): Mills, C. (2023). CUDA MODE - Lecture 12 - Flash Attention 2. christianjmills.com.

- PagedAttention (vLLM): Kwon, W., Li, Z., Zhuang, S., Sheng, Y., Zheng, L., Yu, C. H., Gonzalez, J. E., Zhang, H., & Stoica, I. (2023). Efficient Memory Management for Large Language Model Serving with PagedAttention. arXiv preprint arXiv:2309.06180.

- Roofline Model: Williams, S., Waterman, A., & Patterson, D. (2009). Roofline: An Insightful Visual Performance Model for Multicore Architectures. Communications of the ACM, 52(4), 65-76.

- Speculative Decoding: Leviathan, Y., Kalman, M., & Matias, Y. (2022). Fast Inference from Transformers via Speculative Decoding. arXiv preprint arXiv:2211.17192.

- Transformer Inference Arithmetic: Chen, C. (2022). Transformer Inference Arithmetic. kipp.ly.

- The Transformer Inference Guide: Baseten. (2023). The Full Guide to Transformer Model Inference. Baseten Blog.

- LLM Inference Performance Engineering: Databricks. (2023). LLM Inference Performance Engineering: Best Practices. Databricks Blog.

- Scaling Deep Learning on GPUs: The JAX Authors. (2024). Scaling Deep Learning. jax-ml.github.io.

As models grow larger and demands increase, the importance of efficient inference will only intensify. The techniques and understanding developed today will compound, creating sustainable competitive advantages for organizations that invest in deep technical excellence. The path to that excellence begins with understanding your hardware, measuring what matters, and relentlessly optimizing based on data-driven insights.